AI-Powered Solutions Promise Personalized Sound and Direct Brain Implants for Hearing Disorders

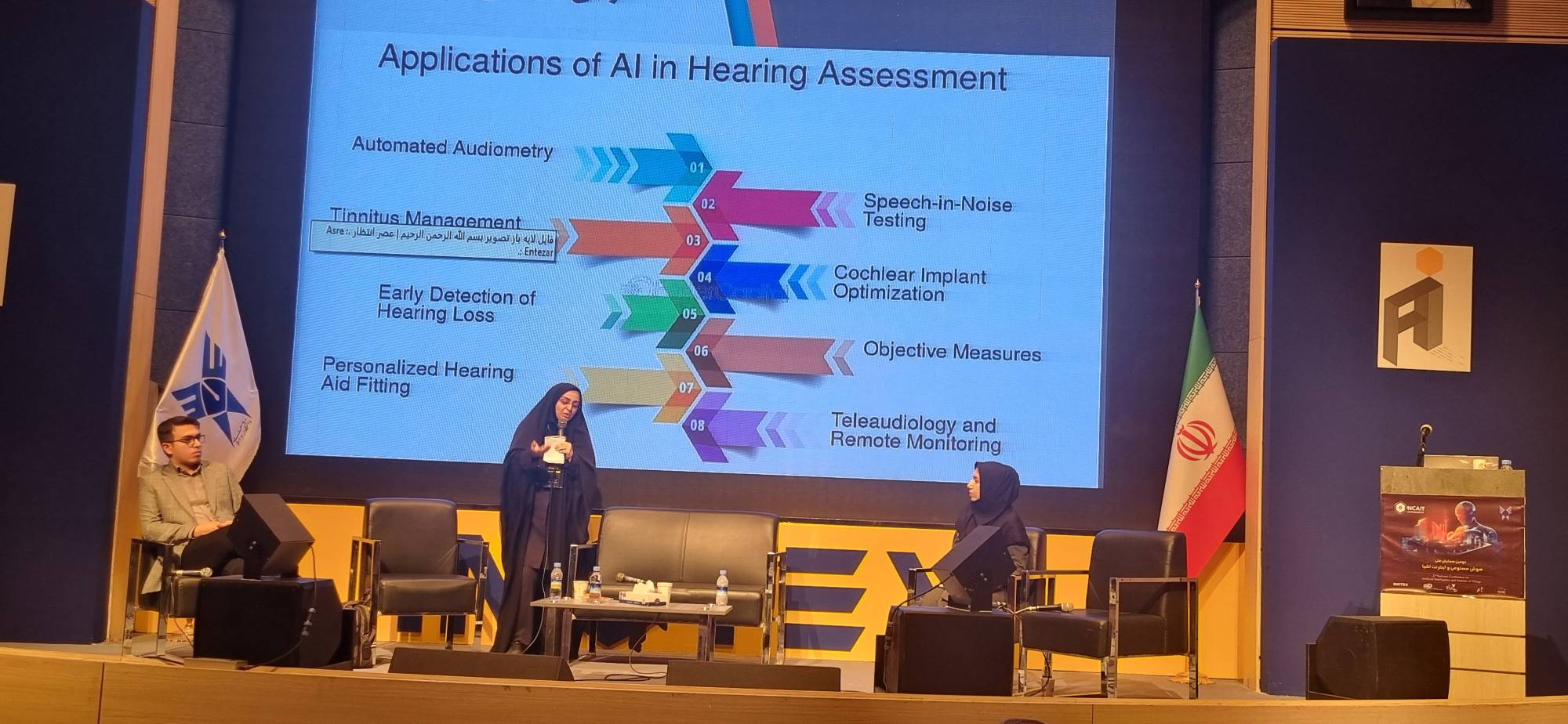

AI and Medicine Panel at INOTEX 2025: Revolutionizing Auditory Rehabilitation with AI – From Smart Hearing Aids to Brain Signal Processing

At the AI and Medicine panel during INOTEX 2025 on May 1, 2025, a faculty member from Shahid Beheshti University of Medical Sciences highlighted AI's transformative potential in auditory rehabilitation. She described smart hearing aids and brain-connected implants as the future of treating hearing and speech disorders.

During the specialized panel on "Medicine and Its Integration with Artificial Intelligence" at INOTEX 2025, Elham Tavanaei, a faculty member of the Rehabilitation Faculty at Shahid Beheshti University of Medical Sciences, addressed the challenges of bridging technology and medical science. She noted, "In this panel, I realized that many colleagues have ideas but don't know where to turn. This is unfortunate. I also have ideas that I'm sharing here, hoping we can collaborate to bring them to fruition."

Tavanaei, whose expertise lies in audiology, added, "In rehabilitation, we deal with sensory, motor, and cognitive disorders, and it's essential to use modern tools to help patients recover."

Discussing auditory approaches in rehabilitating the hearing-impaired, she explained, “Instead of focusing on sign language, we aim to optimize sound and process auditory signals. Our goal is to enhance the sound quality of hearing aids and cochlear implants using AI, delivering a sound closer to natural hearing for users.”

She continued, "Currently, many hearing aid users are dissatisfied with sound quality because their auditory cells are damaged, and simply amplifying sound isn't enough. We need AI to compensate for the sounds that damaged cells can no longer perceive. In the future, hearing aids might even be designed to filter out specific voices or to replace disruptive noise with music."

Tavanaei also proposed innovative ideas such as attaching cameras to hearing aids for lip-reading, using sound-tracking systems, and even replacing hearing aids with brain implants. She stated, “In the future, chips placed in the auditory cortex of the brain could enable hearing without the need for hearing aids.”

Addressing the risks of using earbuds and headphones, she suggested, "Sensors should be designed to warn users when signs of hearing loss appear or when sound levels reach dangerous thresholds. We also need self-adjusting hearing aids that therapists can tune remotely over the internet, without in-person visits."

Emphasizing the role of big data and machine learning in diagnosing hearing disorders, the Shahid Beheshti faculty member noted, “Differential diagnosis for some disorders is very challenging. AI-driven analysis of big data can clarify the boundaries between these conditions.”

Tavanaei also highlighted the use of virtual reality in treating conditions such as tinnitus and hyperacusis, stating, "Combining psychological therapy, sound therapy, and virtual reality in a calming environment can be effective."

On motor rehabilitation and speech therapy, she added, “In physiotherapy, sensors can help individuals detect movement errors or record gait disorders. In speech therapy, by measuring brain signals and converting them into sound, we can enable those who have lost their ability to speak to communicate again.”

Quick Access

Address: Pardis Technology Park, 20th km of Damavand Road (Main Street), Tehran, I.R. Iran.

Postal Code: 1657163871

Tel: 021-76250250

Fax: 021-76250100

E-mail: info@techpark.ir

Website: iiid.tech